My preferred way of browsing is via the forum kind of applications, such as reddit or hacker news, so at that point I'm not really using a browser. However, the majority of the content is delivered from websites so you see and interesting title, tap on it, and the in-app browser or the main browser is opened. This typically works fine, until the site you're accessing is a megalith and takes tens of seconds to load. After at most 3 seconds, if barely any content has loaded, the link is forgotten and I move on the the next link. That's it.

The problem is that these websites are offering too many features for them to be practical on a smartphone. Sometimes websites take even longer because they need to load the comments section, then come the suggested posts, with ultra big resolution images, followed by the author's biography... It's unnecessary, I just want to see content.

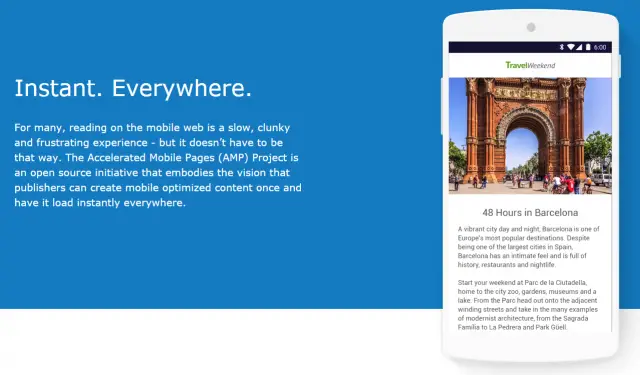

A team of internet companies, including Google, have come up with Accelerated Mobile Pages (AMP). It is primarily a technological development (not exactly unheard of, as we'll see), but through its restrictions it tries to limit the amount of unnecessary crap on pages. As I said, it's a development, however much of this development is in terms of standards and rules rather than faster networks, or something like that.

In fact ,the focus is on basically banning a whole bunch of heavy and also some outdated HTML elements. Unsurprisingly, no more <applet>, no more <frame> and no more <embed>. There are also strict limitations on JavaScript, however the most surprising (but great) banned elements are <input> and <form> (with the exception of <button>). It may not directly impact immediate performance of HTML, but it will surely stop developers from adding useless "post a comment" forms.

The focus is primarily on immediate content. If I get a link while chatting and I open it up, I don't have more than 3 seconds to read the title and move back to the chat. Thankfully, on Android, this experience shall now improve with the new chrome tabs introduced in Marshmallow. It's a technical thing, but basically it avoids having to use either an in-app browser (which is isolated from your standard chrome) or opening up chrome (which is slow).

|

| Chrome tabs are much faster, at least in this demo (via Ars Technica) |

But let's get back to AMP. As I said, it is content that the majority wants, so in this age of platform webapps, single-page sites and all the rest, simplicity, again, trumps features. Despite the lack of features, static areas of a website are hugely important. If you're interested, here's a short how-to, however it is fair to note that static this time is mostly client side, so no JavaScript - which means you'll probably need server-side processing if you have "dynamic" content.

AMP avoids the common JavaScript the web is used to and realises the idea of Web Components. These do have JavaScript under the hood, but since they are managed differently, it makes the page load faster without synchronous blocks by JavaScript. AMP also restricts inline styling, conditional comments and some CSS attributes (although CSS is not so limited compared to JS).

As yet, (being days or hours since being announced) I personally do not consider this as a major breakthrough technologically - it's only a set of rules to reduce the bloat on webpages who primarily host content. However, I am very glad with the way things are going and I do hope it gains traction.

The benefits I see are greatly improved user experience with much faster load times and no nonsense web pages along with better development. The more modular the pages, due to web components, the easier it is to develop. There are no messy inline styles or randomly placed JavaScript. Things are put in their place and the rules are strict - otherwise you'll not qualify for AMP and your page won't make it to the top of search results.

Unfortunately, I don't have that much control on this blog, otherwise I would have AMP'd it right away!

For further details, there are quite some resources: